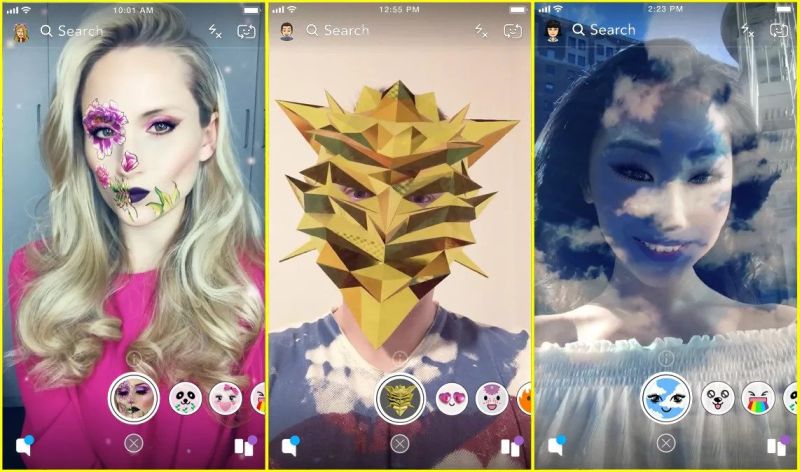

Snap’s creative approach is to actively promote augmented reality to the masses, primarily through a young, creative audience that is already starting to use AR as a tool for self-expression. Avid Snapchat users will agree: the masks in the app are incredible. With a single tap on the Snapchat screen, you can instantly make your selfie sexier, tougher, or funnier.

You point the camera at yourself, activate a mask, and with one tap your photo gets amazing puppy ears and a nose. With one swipe, waterfalls pour out of your eyes. But surely you’ve wondered how Snapchat filters are created and how they work on a technical level?

Mask creation can be based on machine learning algorithms, so don’t miss the chance to visit LITSLINK to learn more about machine learning and how to leverage its power for your business.

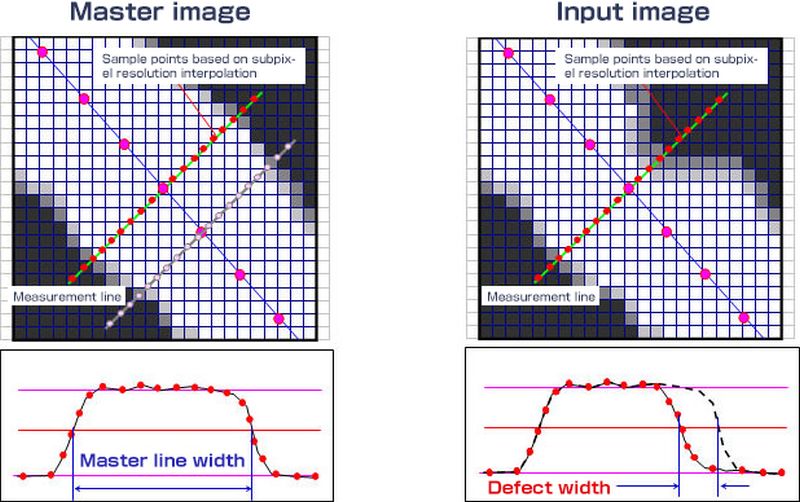

Image Processing Technology

The Snapchat app allows users to apply different masks and filters that change facial features in photos and during video chats. Snapchat filters are built on image processing technology, which performs mathematical operations on each pixel of an image.
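As a minimal illustration of what "a mathematical operation on each pixel" means (not Snapchat’s actual code), the sketch below applies a linear brightness/contrast adjustment and a grayscale conversion to every pixel. Representing an image as nested lists of RGB tuples is an assumption made to keep the example dependency-free; the function names are hypothetical.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B), each channel in 0..255
Image = List[List[Pixel]]      # rows of pixels

def clamp(value: float) -> int:
    """Round to the nearest integer and keep it inside the valid 0..255 range."""
    return max(0, min(255, round(value)))

def adjust_brightness_contrast(image: Image, alpha: float, beta: float) -> Image:
    """Apply out = alpha * in + beta independently to every channel of every pixel.
    alpha scales contrast, beta shifts brightness."""
    return [[tuple(clamp(alpha * c + beta) for c in pixel) for pixel in row]
            for row in image]

def to_grayscale(image: Image) -> List[List[int]]:
    """Replace each pixel with a weighted sum of its channels (ITU-R BT.601 luma weights)."""
    return [[clamp(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
            for row in image]
```

For example, `adjust_brightness_contrast([[(100, 200, 250)]], 1.5, 10)` yields `[[(160, 255, 255)]]`: each channel is transformed by the same formula, and values above 255 are clipped.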

Most modern face recognition technologies rely on systems that learn from example images. Training uses datasets of images that contain faces and images that do not. Each fragment of the image under study is described by a feature vector, which classifiers (algorithms that determine whether an object is present in a frame) use to decide whether that part of the image is a face.
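The sketch below shows the "fragment → feature vector → classifier" idea under deliberately simple assumptions. Each grayscale patch is described by two Haar-like features (brightness differences between rectangular regions, computed with an integral image, the kind of feature used in the Viola-Jones detector), and a nearest-centroid classifier stands in for the boosted cascades or neural networks that production systems use. All function names are hypothetical.

```python
from typing import Dict, List, Sequence

Patch = List[List[float]]  # grayscale intensities in 0..1, row-major

def integral_image(patch: Patch) -> List[List[float]]:
    """ii[y][x] holds the sum of all pixels above and to the left of (x, y)."""
    h, w = len(patch), len(patch[0])
    ii = [[0.0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0.0
        for x in range(w):
            row_sum += patch[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii

def rect_sum(ii: List[List[float]], x: int, y: int, w: int, h: int) -> float:
    """Sum of a w x h rectangle in O(1) using four integral-image lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]

def haar_features(patch: Patch) -> List[float]:
    """Feature vector of two Haar-like features, normalized by patch area:
    [top half minus bottom half, left half minus right half]."""
    h, w = len(patch), len(patch[0])
    ii = integral_image(patch)
    area = w * h
    top_bottom = rect_sum(ii, 0, 0, w, h // 2) - rect_sum(ii, 0, h // 2, w, h - h // 2)
    left_right = rect_sum(ii, 0, 0, w // 2, h) - rect_sum(ii, w // 2, 0, w - w // 2, h)
    return [top_bottom / area, left_right / area]

def train_centroids(vectors: Sequence[List[float]], labels: Sequence[str]) -> Dict[str, List[float]]:
    """Average the feature vectors of each class (e.g. "face" and "non-face")."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for vec, label in zip(vectors, labels):
        acc = sums.setdefault(label, [0.0] * len(vec))
        for i, v in enumerate(vec):
            acc[i] += v
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}

def classify(vec: List[float], centroids: Dict[str, List[float]]) -> str:
    """Return the label whose centroid is closest in squared Euclidean distance."""
    return min(centroids,
               key=lambda label: sum((a - b) ** 2 for a, b in zip(vec, centroids[label])))
```

Training on labeled patches and then calling `classify(haar_features(patch), centroids)` answers the same yes/no question the paragraph describes, just with far weaker features than a real detector.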

Face Detection

At this stage, the input is either a frame from a video stream (from a webcam or a prerecorded video clip) or a loaded static image. The image is processed by a face localization function, which finds the rectangles enclosing faces, finds the approximate contours of the faces within these rectangles, and determines each face’s angle of rotation relative to the horizontal (or vertical) axis.
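Two of these outputs can be illustrated with simple geometry. The sketch below assumes the approximate contour is already available as a list of (X, Y) points and that two eye centers are known; it derives the enclosing rectangle from the contour and estimates the in-plane rotation from the line through the eyes. Using the eyes for the angle is an assumption for illustration, and the function names are hypothetical.

```python
import math
from typing import Iterable, Tuple

Point = Tuple[float, float]

def bounding_box(contour: Iterable[Point]) -> Tuple[float, float, float, float]:
    """Smallest axis-aligned rectangle (x, y, width, height) enclosing a contour."""
    xs, ys = zip(*contour)
    return (min(xs), min(ys), max(xs) - min(xs), max(ys) - min(ys))

def roll_angle(eye_on_left: Point, eye_on_right: Point) -> float:
    """Angle, in degrees, of the line through the eye centers relative to the horizontal.

    Image coordinates are assumed (y grows downward), so a positive angle means
    the face appears tilted clockwise on screen.
    """
    dx = eye_on_right[0] - eye_on_left[0]
    dy = eye_on_right[1] - eye_on_left[1]
    return math.degrees(math.atan2(dy, dx))
```

`atan2` is used instead of `atan(dy / dx)` so the angle stays well-defined even when the face is rotated by 90 degrees and `dx` is zero.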

Once a photo of the face is captured, analysis begins. Most facial recognition solutions use 2D images rather than 3D volumetric images, because 2D photos are easier to match against publicly available photos or photos in a database. Each face is made up of distinguishable landmarks, or anchor points; a human face is commonly described as having about 80 nodal points.

Given an input image or video frame, the detector creates a pyramid representation of that image (the same image at progressively smaller scales) and applies a sliding window to each level of the pyramid, checking whether each window contains a face. Because a single face usually triggers several overlapping detections, a non-maximum suppression (NMS) operation is then performed to discard the overlapping rectangles. The result is each face’s bounding box, output as rectangle coordinates (X, Y, width, and height).
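The pyramid, sliding-window, and NMS steps can be sketched as follows. This is an illustrative sketch, not Snapchat’s detector: the per-window face/non-face classifier is omitted, and the window size (24 px), step, scale factor (1.25), and IoU threshold (0.3) are assumed values. Windows from smaller pyramid levels are mapped back to original-image coordinates, which is why they appear as larger boxes.

```python
from typing import Iterator, List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height)

def pyramid_windows(img_w: int, img_h: int, win: int = 24, step: int = 8,
                    scale: float = 1.25) -> Iterator[Box]:
    """Yield sliding-window positions over every pyramid level, in original-image coordinates.

    Level k is the image shrunk by scale**k; a fixed win x win window on a smaller
    level therefore covers a larger region of the original image.
    """
    factor = 1.0
    while img_w / factor >= win and img_h / factor >= win:
        level_w, level_h = int(img_w / factor), int(img_h / factor)
        side = round(win * factor)
        for y in range(0, level_h - win + 1, step):
            for x in range(0, level_w - win + 1, step):
                yield (round(x * factor), round(y * factor), side, side)
        factor *= scale

def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two (x, y, w, h) rectangles."""
    ix = max(0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    iy = max(0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0

def nms(boxes: Sequence[Box], scores: Sequence[float], threshold: float = 0.3) -> List[Box]:
    """Greedy non-maximum suppression: visit boxes from highest to lowest score and
    keep a box only if it overlaps every already-kept box by at most `threshold` IoU."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= threshold for j in keep):
            keep.append(i)
    return [boxes[i] for i in keep]
```

For instance, `nms([(10, 10, 50, 50), (12, 12, 50, 50), (200, 200, 40, 40)], [0.9, 0.8, 0.7])` keeps the first and third boxes: the second one is a near-duplicate of the first (IoU about 0.85), while the third belongs to a different face.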

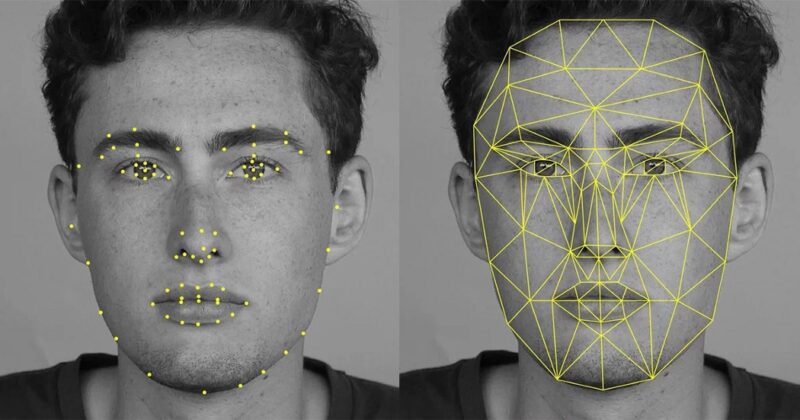

Facial Landmarks

Facial recognition software analyzes nodal points, such as the distance between your eyes or the shape of your cheekbones. During this step, the coordinates of each facial feature are determined and represented as (X, Y) points.
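Once features are available as (X, Y) points, measurements such as the distance between the eyes are plain geometry. The sketch below assumes a hypothetical dictionary of named landmarks (`left_eye`, `right_eye`, `nose_tip`, `chin`); real landmark models return indexed point arrays, and which measurements a system actually uses is, as the article notes, system-specific.

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float]

def distance(p: Point, q: Point) -> float:
    """Euclidean distance between two (X, Y) landmark points."""
    return math.hypot(q[0] - p[0], q[1] - p[1])

def face_measurements(landmarks: Dict[str, Point]) -> Dict[str, float]:
    """Compute a few example measurements from named landmark points.

    Dividing by the eye distance makes the ratio independent of how large
    the face appears in the frame.
    """
    eye_distance = distance(landmarks["left_eye"], landmarks["right_eye"])
    nose_to_chin = distance(landmarks["nose_tip"], landmarks["chin"])
    return {
        "eye_distance": eye_distance,
        "nose_to_chin": nose_to_chin,
        "nose_to_chin_ratio": nose_to_chin / eye_distance,
    }
```

Raw distances change when the user moves closer to the camera, so normalized ratios like `nose_to_chin_ratio` are the more useful descriptors of face shape.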

The purpose of landmark detection is to find the points of the main facial features and determine the location of the face in the image. Once the face has been located, the system looks for key contours:

- Face contour

- Left eye

- Right eye

- Left eyebrow

- Right eyebrow

- Left pupil

- Right pupil

- Nose

- Lips

Each of these contours is an array of points on a plane. The system finds pivot points on the face that define its individual characteristics. The algorithm for calculating these characteristics differs from system to system and is the developers’ closely guarded secret. Early algorithms used the eyes as their main reference point, but they have since evolved to take into account at least 68 points on the face. These points lie along the face contour and determine the position and shape of the chin, eyes, nose, and mouth, as well as the distances between them.
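A common concrete form of "at least 68 points" is the 68-point iBUG 300-W annotation, the layout used by dlib’s 68-point shape predictor. The sketch below, assuming that layout, splits a flat list of 68 points into named contour arrays. That scheme has no pupil points, so the eye-contour centroid is used here as a rough stand-in for a pupil; that substitution, and the function names, are assumptions for illustration.

```python
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]

# Index ranges (start inclusive, end exclusive) in the 68-point iBUG 300-W layout.
# Names follow common dlib tooling, which labels left/right from the subject's
# point of view.
LANDMARKS_68: Dict[str, Tuple[int, int]] = {
    "jaw": (0, 17),            # face contour along the jaw and chin
    "right_eyebrow": (17, 22),
    "left_eyebrow": (22, 27),
    "nose": (27, 36),          # nose bridge and lower nose
    "right_eye": (36, 42),
    "left_eye": (42, 48),
    "mouth": (48, 68),         # outer and inner lip contours
}

def split_contours(points: Sequence[Point]) -> Dict[str, List[Point]]:
    """Group a flat list of 68 (X, Y) landmarks into named contour arrays."""
    if len(points) != 68:
        raise ValueError(f"expected 68 landmarks, got {len(points)}")
    return {name: list(points[start:end]) for name, (start, end) in LANDMARKS_68.items()}

def centroid(points: Sequence[Point]) -> Point:
    """Mean position of a contour, e.g. a rough eye-center (pupil) estimate."""
    xs, ys = zip(*points)
    return (sum(xs) / len(xs), sum(ys) / len(ys))
```

Keeping each contour as its own point array makes it straightforward to attach an effect to one feature, for example anchoring sunglasses to the two eye centroids.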

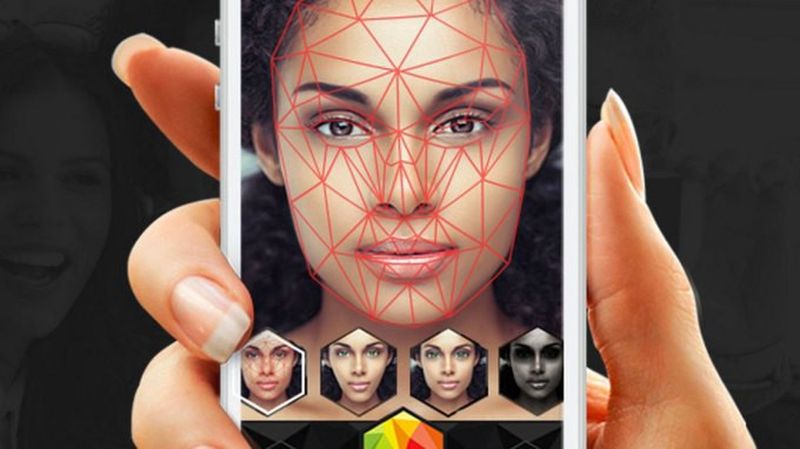

Image Processing Using the Active Shape Model

Once the face has been detected, Snapchat goes even further and applies an Active Shape Model (ASM) to locate your facial features. The primary goal of ASM is not face recognition but the precise localization of the face and its anthropometric points in the image for further processing.

In almost all algorithms, a mandatory step that precedes classification is alignment: rotating the face image into a frontal position relative to the camera, or bringing a set of faces (for example, a training sample for a classifier) into a single coordinate system.
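A minimal way to bring faces into a single coordinate system is a similarity transform (rotation, uniform scale, translation) that moves both eye centers onto fixed canonical positions. The sketch below assumes exactly two reference points and treats points as complex numbers, which makes the transform a one-liner; the canonical coordinates (30, 40) and (70, 40) and the function names are hypothetical choices. With more than two reference points, a least-squares (Procrustes) fit would be used instead.

```python
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float]

def similarity_from_eyes(src_left: Point, src_right: Point,
                         dst_left: Point, dst_right: Point) -> Callable[[Point], Point]:
    """Build the similarity transform that maps the two source eye centers
    exactly onto the two target positions.

    Points are treated as complex numbers z = x + iy, so the transform is z -> a*z + b.
    """
    s1, s2 = complex(*src_left), complex(*src_right)
    d1, d2 = complex(*dst_left), complex(*dst_right)
    a = (d2 - d1) / (s2 - s1)  # rotation and uniform scale
    b = d1 - a * s1            # translation

    def transform(p: Point) -> Point:
        z = a * complex(*p) + b
        return (z.real, z.imag)

    return transform

def align_landmarks(points: Sequence[Point], left_eye: Point, right_eye: Point,
                    canonical_left: Point = (30.0, 40.0),
                    canonical_right: Point = (70.0, 40.0)) -> List[Point]:
    """Move all landmarks into a shared, upright frame in which the eyes
    always land on the same canonical positions."""
    t = similarity_from_eyes(left_eye, right_eye, canonical_left, canonical_right)
    return [t(p) for p in points]
```

For a face tilted 45 degrees with eyes at (10, 10) and (20, 20), the point midway between the eyes, (15, 15), lands at (50, 40), halfway between the canonical eye positions on a level line.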

To implement this stage, the system must locate anthropometric points that are characteristic of all faces, most often the centers of the pupils or the corners of the eyes. To reduce computational costs in real-time systems, developers typically use no more than ten such points. ASMs are designed to localize these anthropometric points in the facial image accurately.
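In the classic ASM formulation, a shape (all landmark coordinates flattened into one vector) is approximated as the mean shape plus a weighted sum of a few principal "modes of variation": x ≈ x̄ + P·b, with each weight b_i limited to about ±3√λ_i, where λ_i is the variance of mode i. A full ASM alternates between moving each point toward a better image match along its profile and applying this constraint. The sketch below shows only the constraint step and assumes the mean shape, orthonormal modes, and variances were already computed by PCA on aligned training shapes; the function name is hypothetical.

```python
import math
from typing import List, Sequence, Tuple

def constrain_shape(shape: Sequence[float], mean: Sequence[float],
                    modes: Sequence[Sequence[float]], eigenvalues: Sequence[float],
                    k: float = 3.0) -> Tuple[List[float], List[float]]:
    """Project a candidate shape onto the shape model and clamp each parameter.

    shape, mean: flattened coordinates [x0, y0, x1, y1, ...]
    modes: orthonormal eigenvectors, each the same length as `mean`
    eigenvalues: variance of each mode; parameters are clamped to +/- k * sqrt(variance)
    Returns the plausible (constrained) shape and the clamped parameters b.
    """
    diff = [s - m for s, m in zip(shape, mean)]
    params: List[float] = []
    for mode, lam in zip(modes, eigenvalues):
        b = sum(d * p for d, p in zip(diff, mode))  # projection onto this mode
        limit = k * math.sqrt(lam)
        params.append(max(-limit, min(limit, b)))
    result = [float(m) for m in mean]
    for b, mode in zip(params, modes):
        for i, p in enumerate(mode):
            result[i] += b * p  # reconstruct x = mean + P * b
    return result, params
```

The projection discards any deviation the model cannot explain, and the clamping rejects implausibly extreme shapes, which is what keeps the located points looking like a face even when image evidence is noisy.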

Lens Studio Tool

Snap allows users to create custom face masks with its Lens Studio tool. Previously, the program only made it possible to create augmented reality objects, but Snapchat has since released seven new templates for creating virtual masks. In addition, the company launched the Official Creator Program, which gives the most creative users access to technical support and additional promotion, as well as the opportunity to test new features and templates before anyone else.

To draw an AR mask, open the camera in the Snapchat app and tap the Create button in the AR bar at the bottom of the screen. You can then draw any visuals, which Snapchat converts into AR elements. They can be drawn both on a selfie and on an image taken with the main camera.

Further Developments

Snap has announced a series of updates to its augmented reality toolkit. Developers will get voice search for effects, access to machine learning in Lens Studio, and a new data collection system that turns user photos into three-dimensional maps. Snap also wants developers to bring their own neural networks to its platform.

SnapML allows users to integrate their own trained neural networks and augment the world in more sophisticated ways. Where will Snap take these experiments? Possibly toward the most functional augmented reality platform in the world.